Coddling the Anxious Generation

Reflections on Haidt and Lukianoff

I’ve been reading and watching the work of several sociologists and psychologists (mainly Jonathan Haidt et al.) on the effects of certain ideas on the behavior of the current generations of Western society, particularly Gen Z.

Haidt has identified three terrible ideas that have reshaped, and continue to reshape, our approach to protecting others from harm. Those three terrible ideas are:

Fragility: Whatever doesn’t kill you makes you weaker… which fails to recognize that children are antifragile¹

Many students arrive at college without a driver’s license, and without ever having dated, tried alcohol, or held a job.

Depression and anxiety tripled on campus as Gen Z became undergraduates. The increase shows up in behavior, not just in self-reported data, and is tied to the arrival of smartphones and social media.

Emotional Reasoning: Always trust your feelings… which fails to recognize that we are all prone to emotional reasoning and confirmation bias²

Us vs. Them: Life is a battle between good people and evil people… which fails to recognize that we are all prone to tribalism and dichotomous thinking

Below are my conclusions, drawn from a careful reading of Haidt’s The Coddling of the American Mind and The Anxious Generation, and from several video presentations³ of the ideas in those books.

The argument that smartphones, social media and gaming help teach children and teens responsibility is, frankly, garbage. In reality, they undermine the development of reality-based thinking, real-life responsibility, life skills and the risk-taking necessary to reach normal adulthood.

The argument that limiting access to phones, devices, social media and online gaming will create a repressed, ravenous appetite that is unleashed once teens are independent may hold for a small percentage of teens. By and large, though, limiting these things forces teens to engage in real-life situations, which shape their thinking and habits differently, so that they reach independent adulthood with convictions that allow them to charge competently forward into life.

The assumption that there is a silent contingent of fragile people who need mental and emotional protection creates a self-fulfilling cycle. In today’s climate, once someone assumes that those around them are fragile, they often become outspoken advocates for that supposed silent contingent, calling out any ideas or speech they feel could potentially “harm” those fragile people. This in turn fosters actual fragility of mind and emotions, creating the very problem the advocacy set out to correct. Protective bubbles protect indiscriminately, attempting to eliminate every risk or injury, which over time has the potential to create “the weakest generation”.

Parents need to be bold and firm, with clear-headed concern, setting parameters that give their children and teens the opportunity to develop habits that will actually prepare them for life in the real world, not for a virtual life. Parents who have acquiesced in the past may need to practice saying “no” on smaller things first, building the resolve to say “no” on bigger, more critical things. Parents should make sure to communicate to their kids that these decisions are not punishment for doing something wrong; they are meant to ensure that normal development is taking place, so that their children and teens are prepared to take on life.

Parents need to avoid overreacting when children and teens take real-life risks and sometimes do stupid things. Overreacting reinforces their sense of fragility, depriving them of confidence and competence, both of which come with experience, failure and sometimes even injury. From the child’s point of view: if my parents can’t trust me, then I certainly can’t trust myself.

Teens (and their parents) who have become entrenched in wrong thinking and bad habits may need a process modeled on cognitive behavioral therapy (CBT) to help them recognize distorted thinking and replace it with complex, integrated thinking, while developing the ability to clearly assess their habits and shape them around truth. As understanding grows, new habits must also be put in place and reinforced by parents, family and community, giving teens a chance to correct their thinking and behavior before entering independent adulthood.

These three terrible ideas have permeated Western society through the rise of, and acquiescence to, call-out culture, which prioritizes feelings over common sense. If not corrected, they threaten to prevent a large percentage of Gen Z from becoming functional, normal adults, thereby jeopardizing the future strength of our society.

Two Additional Reflections outside the scope of what Haidt is addressing:

Evangelicalism bought into and promoted a form of these ideas from the 1950s through the 1980s, and helped scale them up throughout the church in North America with rules-based activist movements (everything from the Moral Majority to purity/abstinence culture) that dictated how you dressed, whom you dated, what you drank, and which political issues and candidates you rallied around. The result was a generation who could not function outside their protective, bureaucratically imposed bubbles. Many Christians who grew up in this era were either paralyzed in situations where these “rules” didn’t provide an obvious choice or, alternately, untethered from moral guidance altogether, choosing more chaotic, Bohemian alternatives.

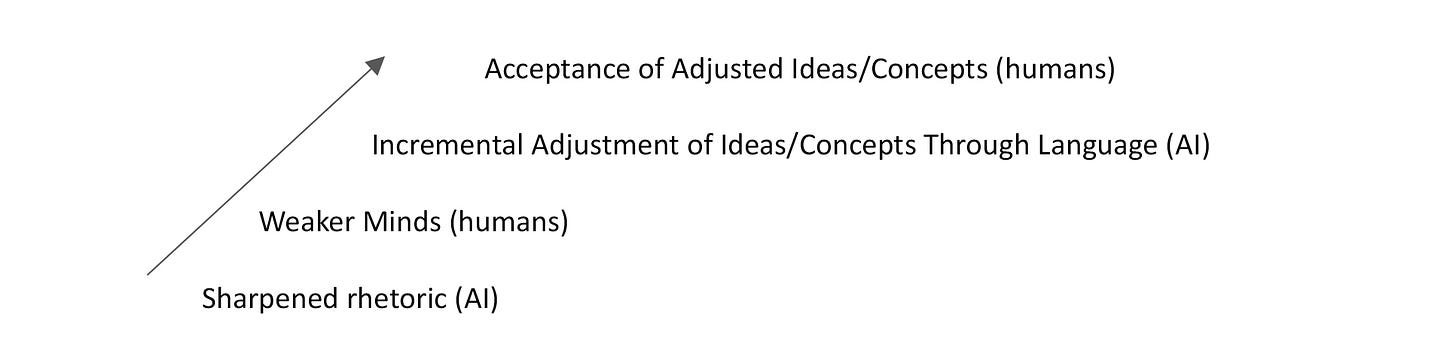

While I believe the main criticism of AI technology is accurate (relying on technology to articulate our ideas makes us dumber), I would posit that AI is potentially even more subversive than that. If AI becomes the standard for editing and sharpening the language humans use to express their ideas and writing, how many steps are there between AI-edited terminology and AI-adjusted meaning? In other words, could AI, over time, subtly influence our thoughts and behavior in ways we never consciously examine? That influence could come either through outputs that let human beings exploit one another exponentially, or through AI’s own “alien intelligence” agenda, which humans are unlikely to recognize right away and may have little control over later on. (We have already willingly traded our time, privacy and reality-based relationships for smartphones and social media. Is AI just the next tradeoff in our willing acquiescence to being “re-educated”?) If AI ends up developing any human characteristics, then we can expect it to prioritize self-preservation, self-promotion and self-gratification. We always hope we are creating a Vision, but we can’t be certain we aren’t releasing an Ultron.

¹ Antifragility is a relatively new term (coined by Nassim Nicholas Taleb in his book Antifragile) for the concept that it is critical for all humans, particularly children and teens, to be exposed to risk, failure, danger, and even injury. Without that exposure, our minds and emotions atrophy, weaken, or never develop the ability to deal with the difficulties of life. However, we do want some boundaries and protections in place to help prevent permanent injuries. As Haidt says, “We want to see bruises, not scars.”

² Confirmation bias is a type of cognitive bias that favors information that confirms your previously existing beliefs or biases. (American Psychological Association, APA Dictionary of Psychology)

Thanks for paving an interesting path toward two books I've been meaning to read but haven't yet gotten to.

I tend to be wary of generalizations, and "generations" are no exception. Still, the unique circumstances surrounding this generation—such as the massive exposure to social media and cellphones—do seem to nudge people toward common behaviors that may strike earlier generations as odd, worrisome, or worse.

The points about AI deskilling are especially thought-provoking. As an AI power user, I've noticed that interacting with these tools can shape our expectations of others. AI tends to be reliably kind and eager to help (with notable exceptions like Grok "fun mode"), which might make relating to real people harder since humans aren't as consistent. One small clarification: the idea of algorithms "ruling" AI ("or those humans who control its algorithms") seems more relevant to social media platforms. Large language models, on the other hand, emerge from extensive training with minimal direct guidance. Developers often describe working with them as akin to interacting with an alien intelligence.

This is a fascinating topic, and I appreciate the insights you've shared!